Imagine a financial analyst bot that not only retrieves real-time stock updates but also advises on portfolio shifts, all without human prompting. That is the kind of application LangChain makes possible. The rise of Generative AI has created demand for agents that can reason, interact dynamically, and process multiple inputs in a coherent workflow. LangChain is a leading framework in this space, offering the abstractions and modularity needed to build AI agents that can fetch data, generate content, and take action in real time.

Understanding LangChain and Why It’s Turning Heads

LangChain is an open-source framework that makes it easier to connect large language models (LLMs) to external data sources, memory components, and reasoning workflows. It gives developers the tools to build complex AI applications that go beyond simple prompt-response systems. At its core, LangChain enables:

- Prompt Engineering: Structured and dynamic prompt management for optimized LLM interactions.

- Memory Integration: Stateful agents that retain context across interactions.

- Tool and API Connectivity: Seamless integration with databases, APIs, and computational tools.

- Reasoning and Planning: Multi-step thought processes and decision trees for complex problem-solving.

- Retrieval-Augmented Generation (RAG): Enhancing responses by incorporating external knowledge sources.
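To make the prompt-engineering point concrete, here is a minimal, framework-free sketch of a reusable prompt template. LangChain's own class for this is `PromptTemplate` in `langchain_core.prompts`; the code below deliberately uses only Python's standard `string.Template` to illustrate the idea, and the template text, variable names, and example inputs are hypothetical.

```python
from string import Template

# Hypothetical reusable prompt for the financial-analyst example.
# $-placeholders are filled at call time, much as LangChain's PromptTemplate fills {variables}.
ANALYST_PROMPT = Template(
    "You are a financial analyst.\n"
    "Portfolio: $portfolio\n"
    "Latest market update: $update\n"
    "Question: $question"
)


def build_prompt(portfolio: str, update: str, question: str) -> str:
    """Render the template. Template.substitute() raises KeyError if any placeholder is missing."""
    return ANALYST_PROMPT.substitute(portfolio=portfolio, update=update, question=question)


# Hypothetical example inputs, not real market data.
prompt = build_prompt(
    portfolio="60% equities, 40% bonds",
    update="Tech stocks fell 3% today.",
    question="Should I rebalance?",
)
print(prompt)
```

Keeping the instructions fixed and injecting only the variable parts makes prompts easier to version, test, and reuse across agents.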

LangChain works with OpenAI’s GPT series, Meta’s LLaMA models, Cohere’s Command R+, and open-source models such as Falcon and Mistral, which makes it a flexible choice for enterprise applications.

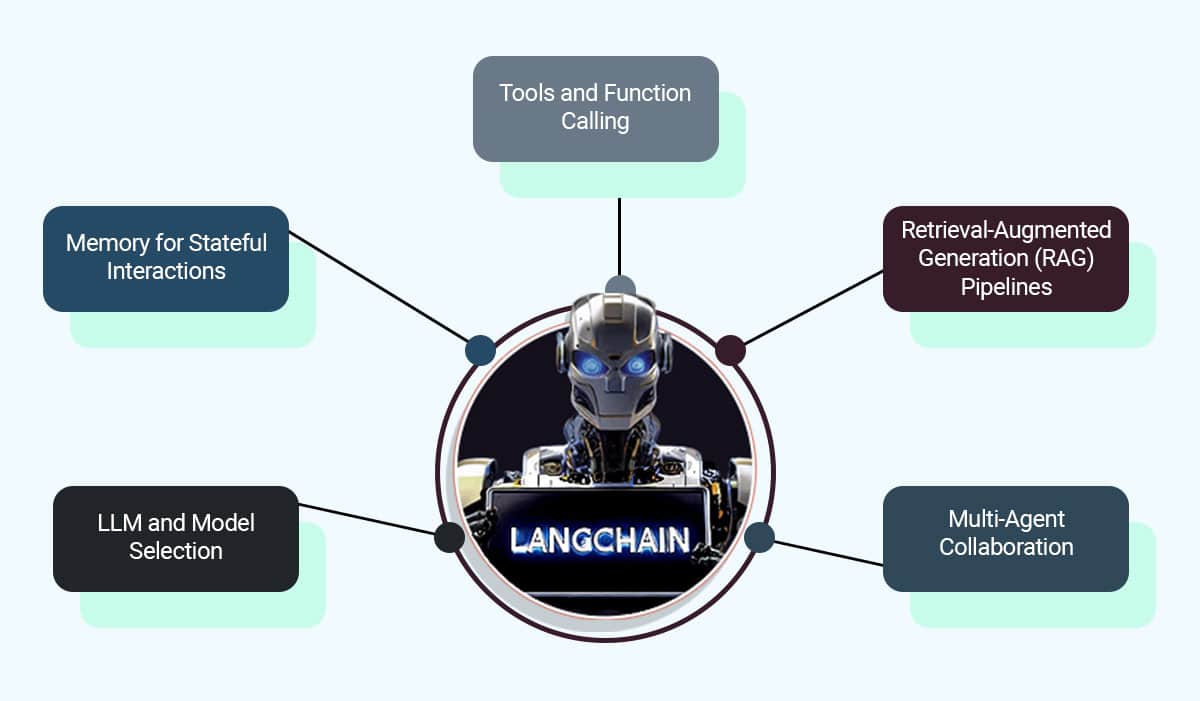

How LangChain Structures a Gen AI Agent

A Generative AI agent built with LangChain is typically assembled from the following building blocks.

1. LLM and Model Selection – Choosing the right model is the foundation. OpenAI’s GPT-4 Turbo, Anthropic’s Claude, and open-source alternatives such as Mistral AI’s Mixtral offer different trade-offs in cost, reasoning ability, and interpretability. Because LangChain exposes chat models through a common interface, developers can swap one model for another with minimal code changes, based on latency, accuracy, and deployment needs.

2. Memory for Stateful Interactions – GenAI agents need to maintain context across conversations. LangChain’s ConversationBufferMemory, ConversationSummaryMemory, and VectorStoreRetrieverMemory let an agent, respectively, recall the full conversation history, keep a running summary of the main points, and retrieve the most relevant past exchanges from a vector store.

3. Tools and Function Calling – LangChain agents interact with external tools via structured function calls. This allows integration with:

- Google Search API, Bing Search API (for real-time information retrieval)

- SQL and NoSQL Databases (for knowledge grounding)

- Wolfram Alpha (for computational queries)

- Python Execution Environment (for dynamic code execution)

- Custom APIs and Plugins (for business-specific functionalities)

4. Retrieval-Augmented Generation (RAG) Pipelines – An LLM knows only what was in its training data, so on its own it struggles with proprietary, domain-specific, or recent information. By implementing RAG, LangChain allows GenAI agents to:

- Retrieve relevant data from vector stores such as FAISS, Pinecone, and Chroma

- Use embedding models like OpenAI’s text-embedding-ada-002 or Cohere’s embeddings

- Ground responses in up-to-date information fetched at query time

5. Multi-Agent Collaboration – LangChain supports multi-agent architectures in which specialized agents handle tasks such as research, summarization, and execution. The agents coordinate through structured workflows, which makes the resulting AI-powered applications more resilient.
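The model-selection building block (item 1) rests on one idea: application code should depend on a shared interface, not on a specific model. In LangChain, chat models share the `invoke` method; the framework-free sketch below mimics that with a `typing.Protocol`. The `StubModel` class, its quality scores, and its per-call costs are all hypothetical stand-ins, not real benchmarks or pricing.

```python
from dataclasses import dataclass
from typing import List, Protocol


class ChatModel(Protocol):
    """The only method the application relies on."""

    def invoke(self, prompt: str) -> str: ...


@dataclass
class StubModel:
    """Hypothetical stand-in for a hosted or self-hosted LLM."""

    name: str
    quality: int          # hypothetical benchmark score (higher is better)
    cost_per_call: float  # hypothetical cost, not real pricing

    def invoke(self, prompt: str) -> str:
        # A real model would generate text; the stub just tags the prompt.
        return f"[{self.name}] {prompt}"


def pick_model(candidates: List[StubModel], budget: float) -> StubModel:
    """Return the highest-quality model whose cost fits the budget."""
    affordable = [m for m in candidates if m.cost_per_call <= budget]
    if not affordable:
        raise ValueError("no model fits the budget")
    return max(affordable, key=lambda m: m.quality)


def answer(model: ChatModel, question: str) -> str:
    # Application logic touches only invoke(), so models are interchangeable.
    return model.invoke(question)


MODELS = [
    StubModel("large-hosted-model", quality=90, cost_per_call=0.03),
    StubModel("small-open-model", quality=70, cost_per_call=0.002),
]
print(answer(pick_model(MODELS, budget=0.05), "Summarize today's market."))
print(answer(pick_model(MODELS, budget=0.01), "Summarize today's market."))
```

With a generous budget the larger model is chosen; tightening the budget switches to the smaller one without any change to `answer`.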
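For the memory building block (item 2), the simplest strategy is a conversation buffer that is replayed into each new prompt. The sketch below is a framework-free illustration, not LangChain's implementation: it keeps a sliding window of recent turns, similar in spirit to LangChain's `ConversationBufferWindowMemory`. A summary memory would instead replace dropped turns with an LLM-written summary, and a vector-store memory would embed turns and retrieve only the relevant ones; neither is shown here. The class name and sample dialogue are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class BufferMemory:
    """Stores (human, ai) turns; keeps only the last `max_turns` when a limit is set."""

    max_turns: Optional[int] = None
    turns: List[Tuple[str, str]] = field(default_factory=list)

    def save(self, human: str, ai: str) -> None:
        self.turns.append((human, ai))
        # Drop the oldest turns once the window is exceeded.
        if self.max_turns is not None and len(self.turns) > self.max_turns:
            del self.turns[: len(self.turns) - self.max_turns]

    def as_context(self) -> str:
        """Render the remembered turns as text to prepend to the next prompt."""
        return "\n".join(f"Human: {h}\nAI: {a}" for h, a in self.turns)


memory = BufferMemory(max_turns=2)
memory.save("I hold Apple and Microsoft shares.", "Noted.")
memory.save("What share of my portfolio is in bonds?", "About 40%.")
memory.save("Should I rebalance?", "That depends on your target allocation.")
print(memory.as_context())
```

Because the window holds two turns, the first exchange (about Apple and Microsoft) has been forgotten. This is the trade-off between bounded prompt size and long-range recall that the summary and vector-store variants address.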
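Structured function calling (item 3) usually works like this: the model emits a tool name plus JSON arguments, and the application looks up and runs the matching function. In LangChain this role is played by the `@tool` decorator in `langchain_core.tools` together with a chat model's `bind_tools`; the sketch below reproduces only the dispatch pattern with the standard library. Both tools are hypothetical: `add` stands in for a computational service, and `lookup_ticker` stands in for a database query using a hard-coded table.

```python
import json
from typing import Any, Callable, Dict

# Registry mapping tool names to Python functions.
TOOLS: Dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function under its own name so the model can call it."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def add(a: float, b: float) -> float:
    """Hypothetical computational tool."""
    return a + b


@tool
def lookup_ticker(company: str) -> str:
    """Hypothetical database tool backed by a hard-coded table."""
    table = {"apple": "AAPL", "microsoft": "MSFT"}
    return table.get(company.lower(), "UNKNOWN")


def dispatch(model_output: str) -> Any:
    """Parse a JSON function call emitted by the model and run the named tool."""
    call = json.loads(model_output)
    name, args = call["name"], call.get("arguments", {})
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    return TOOLS[name](**args)


print(dispatch('{"name": "lookup_ticker", "arguments": {"company": "Apple"}}'))
print(dispatch('{"name": "add", "arguments": {"a": 2, "b": 3}}'))
```

Rejecting unknown tool names, rather than executing whatever the model asks for, is the minimum safeguard; production systems typically also validate argument types against a schema.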
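The retrieval step of a RAG pipeline (item 4) can be sketched as "embed the query, rank documents by similarity, and paste the top hits into the prompt". A real pipeline would use an embedding model and a vector store such as FAISS or Chroma. To stay self-contained, this sketch substitutes simple word-count vectors and cosine similarity, which capture word overlap rather than meaning. The documents and query are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List

# Hypothetical knowledge base for a customer-support agent.
DOCS = [
    "Shipping to Europe takes 5 to 7 business days.",
    "Refund policy: purchases can be returned within 30 days.",
    "Premium support is available 24/7 by phone.",
]


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (a stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, docs: List[str], k: int = 2) -> List[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_rag_prompt(query: str, docs: List[str], k: int = 2) -> str:
    """Augment the question with retrieved context before sending it to the LLM."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


print(build_rag_prompt("What is the refund policy?", DOCS))
```

Instructing the model to answer "using only this context" is what ties its response to the retrieved, up-to-date documents instead of to its training data alone.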
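The multi-agent pattern (item 5) can be reduced to specialized agents that read from and write to shared state, run in order by an orchestrator. In the LangChain ecosystem, graphs like this are typically built with LangGraph. The sketch below is a plain-Python illustration of the pattern only, and all three agents are hypothetical stubs that do no real research or LLM calls.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class SharedState:
    """Workspace passed between agents; each agent reads it and adds its results."""

    task: str
    findings: List[str] = field(default_factory=list)
    summary: str = ""
    actions: List[str] = field(default_factory=list)
    trace: List[str] = field(default_factory=list)


def research_agent(state: SharedState) -> SharedState:
    # Stub: a real research agent would call search or retrieval tools.
    state.findings += [f"{state.task}: finding {i}" for i in (1, 2)]
    return state


def summary_agent(state: SharedState) -> SharedState:
    # Stub: a real summarizer would prompt an LLM with the findings.
    state.summary = f"{len(state.findings)} findings on {state.task}"
    return state


def execution_agent(state: SharedState) -> SharedState:
    # Stub: a real executor might file a ticket or call a business API.
    state.actions.append(f"draft report: {state.summary}")
    return state


Agent = Callable[[SharedState], SharedState]


def run_workflow(task: str, agents: List[Agent]) -> SharedState:
    """Run agents in sequence, recording which agent acted at each step."""
    state = SharedState(task=task)
    for agent in agents:
        state = agent(state)
        state.trace.append(agent.__name__)
    return state


result = run_workflow("Q3 earnings", [research_agent, summary_agent, execution_agent])
print(result.actions)
print(result.trace)
```

A fixed sequence keeps the example simple. Real orchestrators often add branching (for example, sending work back to the research agent when the summary is thin), and the recorded trace is what makes such flows debuggable.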

Real-World Applications of LangChain-Powered AI Agents

1. Conversational AI & Chatbots – By leveraging LangChain, developers can build stateful and intelligent chatbots that go beyond predefined responses. Integration with memory modules and APIs allows chatbots to provide:

- Personalized recommendations

- Multi-turn reasoning

- Transactional assistance (e.g., booking a flight, ordering food)

2. Automated Research Assistants – LangChain facilitates AI-driven research assistants that retrieve, summarize, and analyze papers. Connected to sources such as Google Scholar, arXiv, and proprietary document libraries, these agents can surface insights quickly in sectors such as finance, law, and healthcare.

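3. Code Generation and Debugging – LangChain-powered agents can read codebases, suggest improvements, and help debug software by pairing code-capable models such as OpenAI’s Codex with repository tools, complementing assistants such as GitHub Copilot. LangSmith, LangChain’s tracing and evaluation platform, makes the agents themselves easier to debug and monitor. Together, these capabilities can significantly accelerate development workflows.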

4. Enterprise AI & RAG-Driven Customer Support – Businesses use LangChain for AI-powered customer service by integrating it with Salesforce, Zendesk, and custom ticketing systems. GenAI agents backed by RAG ground their answers in the company’s own documents, which makes responses more accurate, context-aware, and tailored to each query.

How VE’s AI/ML Engineers Use LangChain to Deliver Cutting-Edge Solutions

When it comes to building advanced AI agents with LangChain, the difference between an average implementation and an outstanding one lies in expertise, efficiency, and optimization. Engineers at VE bring years of experience in AI/ML development, leveraging LangChain to craft intelligent, scalable, and highly efficient AI solutions tailored to specific business needs.

- AI Agents That Fit the Industry – Each sector presents specific challenges, and AI agents need to be engineered with these specifics in mind. VE’s AI/ML engineers are experts at crafting AI agents for finance, healthcare, e-commerce, and legal tech, integrating them with proprietary databases and real-time analytics platforms while meeting compliance requirements. Whether it is an AI financial analyst that retrieves and summarizes stock reports or a legal AI assistant that extracts key clauses from contracts, the goal is always to create solutions that make a meaningful impact.

- Building Powerful RAG Pipelines – Retrieval-Augmented Generation (RAG) is the backbone of intelligent, context-aware GenAI agents. AI experts at VE fine-tune embedding models and work with vector stores such as FAISS, Pinecone, and Weaviate, as well as data frameworks such as LlamaIndex, to give AI agents fast access to relevant data. The difference between a generic AI assistant and a highly specialized one often comes down to how well the retrieval pipeline is optimized.

- Scaling AI Workflows with Multi-Agent Systems – A single AI agent can be powerful, but multiple AI agents working together can achieve significantly more. Our AI specialists design multi-agent systems that collaborate to handle complex workflows: one agent may retrieve data, another may analyze it, and a third may generate insights. With LangChain’s support for delegating tasks between agents, persistent memory, and function calling, these agents can be orchestrated to work together seamlessly, making them far more effective in enterprise environments.

- Seamless API and Database Integration – No great AI solution exists in a vacuum. AI/ML engineers at VE make sure that LangChain-driven AI integrates smoothly with enterprise infrastructure, exchanging data with SQL databases and data platforms such as PostgreSQL, MySQL, and Snowflake, NoSQL databases such as MongoDB and Firebase, and cloud services such as AWS Lambda, Azure AI, and Google Cloud Functions. This makes our AI solutions practical, scalable, and easy to deploy in the real world.

- Optimizing Models for Cost and Performance – Choosing the right model is just the start. Our AI developers go beyond simple implementation, rigorously benchmarking LLMs, tuning hyperparameters, and structuring workflows that maximize efficiency while keeping token costs low. Whether the choice is OpenAI’s GPT-4 Turbo, Meta’s LLaMA, or an open-source alternative like Mixtral, model selection is always based on the best balance of accuracy, speed, and cost-effectiveness for the use case.

Why Businesses Trust VE for LangChain-Powered AI Development

Building an AI agent isn’t just about plugging in a large language model. It’s about constructing intelligence that can retrieve, analyze, and act in a way that makes sense for real-world use cases. VE’s AI/ML engineers bring:

- Deep technical expertise in LangChain, vector databases, function calling, and multi-agent orchestration.

- Enterprise-grade solutions that prioritize scalability, security, and seamless system integration.

- Rapid prototyping and deployment for businesses looking to implement AI solutions quickly without compromising quality.

- Bespoke AI strategies that ensure every solution is tailored to the specific needs of an organization.

Generative AI is changing how businesses operate, and with LangChain, AI solutions can go beyond simple interactions to provide real intelligence. Engineers at VE have the skills and experience to make that happen—delivering AI agents that don’t just generate text but truly understand, reason, and act in meaningful ways.

Whether you’re building your first GenAI agent or scaling enterprise-wide AI workflows, VE’s globally experienced AI/ML engineers are your unfair advantage. Tell us your challenge—we’ll show you what’s possible.