A young radiologist stares at a chest CT scan. The patient’s life is at stake. The lesion is subclinical, hardly distinguishable from neighboring tissue, and minutes count. But the physician is not working alone. Running behind the screen, a convolutional neural network (CNN) trained on millions of labeled scans has already detected the lesion, estimated its probability of malignancy, and cross-checked it against historical imaging patterns. Within seconds, the system produces a report showing the nodule’s size, its growth pattern, and its similarity to known cases in The Cancer Imaging Archive (TCIA). The doctor breathes a little easier. Diagnosis just became faster, more accurate, and more consistent.

This isn’t science fiction. It’s happening right now, in hospitals and research centers around the world. Computer vision, long seen as a niche AI field limited to facial recognition and autonomous vehicles, is now front and center in the war against cancer. And it’s transforming diagnostics, treatment planning, surgery, pathology, and even drug discovery in ways that would have been unthinkable just a few years ago.

So let’s peel back the layers and walk through how computer vision is changing the cancer care ecosystem—one frame, one cell, and one pixel at a time.

From Pixels to Predictions: How Computer Vision Works in Oncology

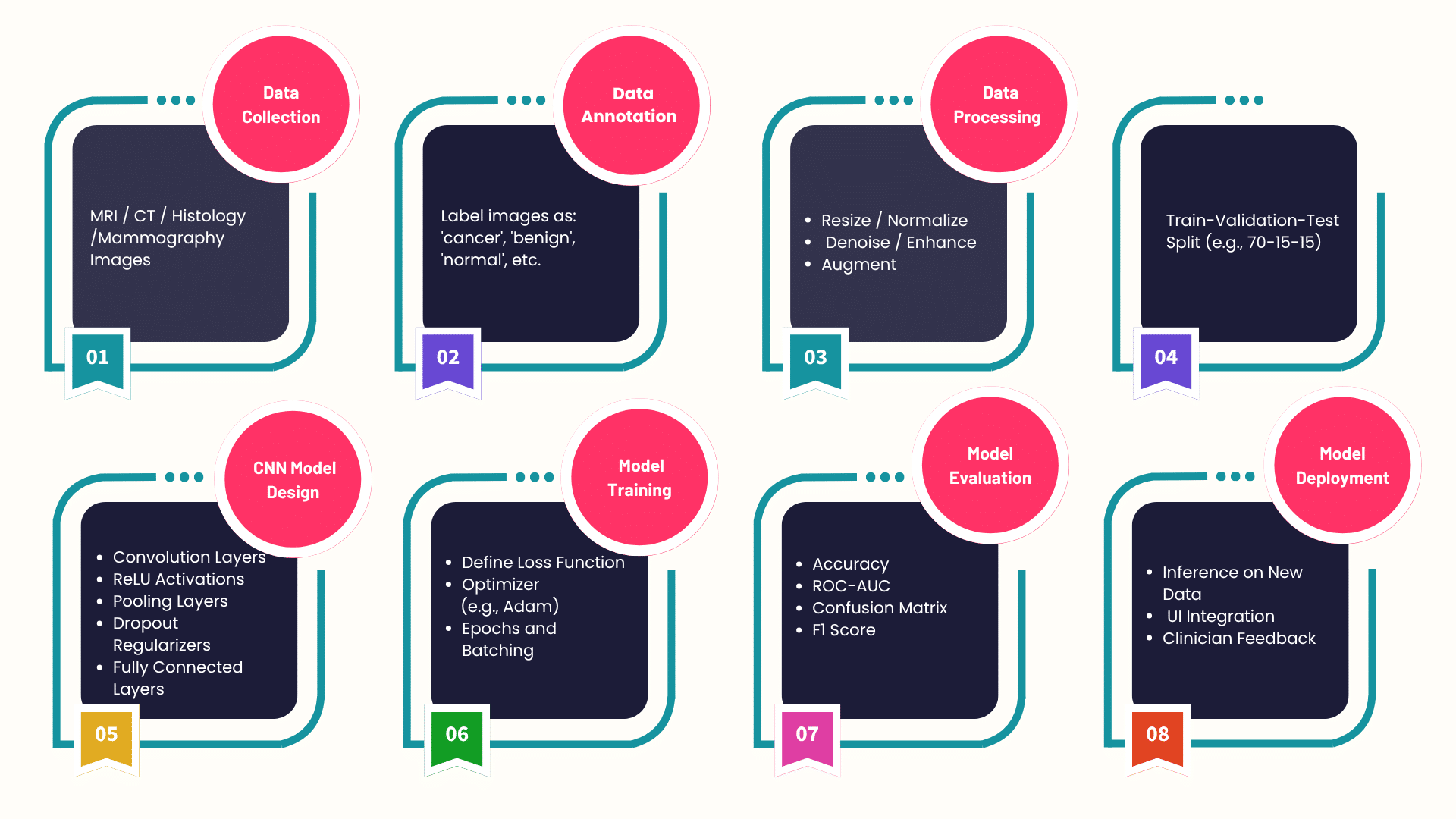

At its core, computer vision enables machines to interpret and analyze visual data. In the cancer domain, that means medical images: X-rays, MRIs, CT scans, PET scans, histopathology slides, and even real-time surgical video feeds. Computer vision models are trained using supervised, semi-supervised, and, more recently, self-supervised learning.

Some of the most commonly used models include:

- Convolutional Neural Networks (CNNs) for image classification and segmentation

- U-Net and V-Net architectures for medical image segmentation

- ResNet, DenseNet, and Inception for deep feature extraction

- Vision Transformers (ViT) for global attention and contextual understanding

- Swin Transformers for scalable hierarchical vision modeling

- YOLOv7 and EfficientDet for real-time object detection, such as flagging polyps in endoscopy video or localizing lesions with bounding boxes

- CLIP (from OpenAI) for zero-shot image classification and SAM (Segment Anything Model, from Meta AI) for promptable image segmentation
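To make the CNN entry concrete: its core operation is sliding a small kernel across the image and recording how strongly each neighborhood matches it. The pure-Python sketch below is a minimal illustration, not how any of the listed models is implemented. It applies one hand-set edge kernel and a ReLU to a hypothetical 5x5 patch. Frameworks such as PyTorch do the same thing with learned kernels, many channels, and GPU acceleration.

```python
# Pure-Python sketch of one convolutional layer: a 'valid' 2D cross-correlation
# (what deep learning frameworks call convolution) followed by ReLU.

def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` without padding and return the feature map."""
    kh, kw = len(kernel), len(kernel[0])
    ih, iw = len(image), len(image[0])
    out = []
    for r in range(ih - kh + 1):
        row = []
        for c in range(iw - kw + 1):
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(acc)
        out.append(row)
    return out


def relu(feature_map):
    """Zero out negative responses, as a CNN's nonlinearity does."""
    return [[max(0.0, v) for v in row] for row in feature_map]


# Hypothetical 5x5 intensity patch with a bright 2x2 "lesion".
image = [
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 9, 9, 0],
    [0, 0, 9, 9, 0],
    [0, 0, 0, 0, 0],
]
# Hand-set vertical-edge kernel (a trained CNN would learn its kernels).
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

feature_map = relu(conv2d_valid(image, kernel))
print(feature_map)  # [[9.0, 9.0, 0.0], [18.0, 18.0, 0.0], [18.0, 18.0, 0.0]]
```

The strong responses sit on the left edge of the blob, where intensity rises from left to right; the ReLU discards the opposite-signed edge.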

Early Detection: Computer Vision as a Diagnostic Powerhouse

Early cancer detection can quite literally make the difference between life and death. Radiologists spend years learning to spot suspicious patterns, but even experienced readers miss findings because of fatigue, heavy caseloads, or subtle presentations. This is where computer vision shines.

Lung Cancer Screening – Deep learning models, such as the end-to-end screening model Google researchers trained on low-dose CT, detect lung nodules and estimate malignancy risk directly from the scan. (Google’s LYNA, or Lymph Node Assistant, targets a different task: finding metastatic breast cancer in lymph node pathology slides.) Public benchmarks such as LUNA16, derived from the LIDC-IDRI collection, have driven the development of nodule detection pipelines that report high sensitivity at low false-positive rates per scan.
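Sensitivity and specificity are tied together through the decision threshold. The sketch below uses hypothetical malignancy scores and labels for six scans to show how moving the threshold shifts the balance between missed cancers and false alarms; real evaluations use far larger cohorts. LUNA16 itself scores detectors with a FROC-based metric (sensitivity averaged over several false-positive rates per scan), but that metric is built from the same counts.

```python
def confusion_at_threshold(scores, labels, threshold):
    """Count TP/FP/TN/FN when every score >= threshold is called positive."""
    tp = fp = tn = fn = 0
    for score, label in zip(scores, labels):
        predicted_positive = score >= threshold
        if predicted_positive and label == 1:
            tp += 1
        elif predicted_positive:
            fp += 1
        elif label == 0:
            tn += 1
        else:
            fn += 1
    return tp, fp, tn, fn


def sensitivity_specificity(tp, fp, tn, fn):
    sensitivity = tp / (tp + fn)  # share of true cancers the model flags
    specificity = tn / (tn + fp)  # share of healthy cases it correctly clears
    return sensitivity, specificity


# Hypothetical malignancy scores for six scans (1 = confirmed cancer).
scores = [0.95, 0.80, 0.40, 0.70, 0.20, 0.10]
labels = [1, 1, 1, 0, 0, 0]

for t in (0.5, 0.3):
    sens, spec = sensitivity_specificity(*confusion_at_threshold(scores, labels, t))
    print(f"threshold={t}: sensitivity={sens:.2f}, specificity={spec:.2f}")
# threshold=0.5: sensitivity=0.67, specificity=0.67
# threshold=0.3: sensitivity=1.00, specificity=0.67
```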

Breast Cancer Imaging – In mammography, products such as iCAD’s ProFound AI apply deep learning to digital breast tomosynthesis (DBT) images to flag possible malignancies, and published reader studies report improved radiologist sensitivity when the tool is used. Public datasets like CBIS-DDSM and INbreast are fueling innovation in this space.

Skin Cancer Classification – Models like ResNet50 and EfficientNet are being fine-tuned on datasets like ISIC (International Skin Imaging Collaboration) to distinguish between benign moles and melanoma, sometimes with accuracy on par with board-certified dermatologists.

Tumor Segmentation: Precision Mapping Down to the Voxel

Segmentation is not just about drawing boundaries around a tumor. It’s about understanding its volume, morphology, vascularity, and relation to surrounding tissues. This is crucial for treatment planning, radiotherapy dose estimation, and surgical resection.
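Volume, the first quantity on that list, falls straight out of a segmentation mask: count the tumor voxels and multiply by the physical size of one voxel. Here is a minimal sketch, assuming a nested-list mask and a voxel spacing that would normally be read from the scan header. The same voxel count yields different volumes at different spacings, which is why spacing must never be hard-coded.

```python
def tumor_volume_ml(mask, spacing_mm):
    """Volume of a binary 3D mask indexed [z][y][x].

    spacing_mm is the (z, y, x) voxel size in millimetres, read from the
    scan header in practice. 1 mL = 1000 mm^3.
    """
    dz, dy, dx = spacing_mm
    voxel_count = sum(v for plane in mask for row in plane for v in row)
    return voxel_count * dz * dy * dx / 1000.0


# Hypothetical 10x10x10-voxel segmentation, fully inside the tumor.
mask = [[[1] * 10 for _ in range(10)] for _ in range(10)]

print(tumor_volume_ml(mask, (1.0, 1.0, 1.0)))  # 1.0  (1 mm isotropic voxels)
print(tumor_volume_ml(mask, (2.0, 0.5, 0.5)))  # 0.5  (thicker slices, finer in-plane)
```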

U-Net: The Gold Standard – Originally developed for biomedical image segmentation, U-Net is still a go-to architecture. It has been extended into variants like Attention U-Net and 3D U-Net, and nnU-Net builds on it by automatically configuring preprocessing, network architecture, and training for each new dataset.
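U-Net-family models are usually trained and evaluated with the Dice coefficient, which measures overlap between the predicted and reference masks (nnU-Net’s default loss, for example, combines Dice with cross-entropy). The sketch below is a framework-free illustration on hypothetical flattened masks, not the implementation used by any of these libraries.

```python
def dice_coefficient(pred, target, eps=1e-7):
    """Dice = 2 * |pred AND target| / (|pred| + |target|) for flattened masks.

    Works for binary masks and, as a 'soft Dice', for predicted probabilities.
    eps keeps the score defined when both masks are empty.
    """
    intersection = sum(p * t for p, t in zip(pred, target))
    total = sum(pred) + sum(target)
    return (2.0 * intersection + eps) / (total + eps)


def dice_loss(probs, target):
    """Loss to minimise during training: 1 - soft Dice."""
    return 1.0 - dice_coefficient(probs, target)


# Hypothetical flattened masks (1 = tumor voxel).
target = [1, 1, 1, 0, 0, 0]
pred = [1, 1, 0, 1, 0, 0]
print(round(dice_coefficient(pred, target), 3))  # 0.667
```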

Vision Transformers in Segmentation – Newer segmentation models like TransUNet combine the localization power of CNNs with the long-range dependencies modeled by transformers. These are particularly useful in complex regions like the brain, where gliomas or metastases can be amorphous.
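The long-range dependencies come from self-attention: every patch embedding is scored against every other patch, regardless of how far apart they sit in the image. The sketch below is a simplified single-head version. It skips the learned query, key, and value projections and the positional embeddings that a real ViT or TransUNet uses, and the patch embeddings are hypothetical.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Single-head scaled dot-product self-attention.

    Simplification: queries, keys and values are the raw patch embeddings
    (a real transformer applies learned projections W_q, W_k, W_v first).
    Returns (outputs, attention_weights).
    """
    d = len(tokens[0])
    outputs, weights = [], []
    for q in tokens:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in tokens]
        w = softmax(scores)  # how much this patch attends to every patch
        weights.append(w)
        outputs.append([sum(wi * v[j] for wi, v in zip(w, tokens)) for j in range(d)])
    return outputs, weights


# Hypothetical embeddings for four image patches; patches 0 and 3 could be far
# apart in the image but similar in content.
patches = [[1.0, 0.0], [0.0, 1.0], [0.2, 0.1], [0.9, 0.1]]
out, attn = self_attention(patches)
print([round(x, 2) for x in attn[0]])
```

Patch 0 attends more strongly to the similar patch 3 than to the dissimilar patch 1. Spatial distance never enters the calculation, whereas a CNN needs many stacked layers to connect distant regions.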

Multimodal Segmentation – By combining PET and MRI images with cross-modal CNNs or fusion transformers, researchers can produce segmentations that account for both anatomical and metabolic cues. This matters in cancers like glioblastoma, where infiltrating tumor cells often extend beyond what a single modality shows.

Histopathology and Digital Pathology: AI at the Microscope

Traditional pathology involves examining tissue slides under a microscope, a time-consuming and partly subjective process. Digital pathology scans those slides into high-resolution images, and computer vision can then analyze them consistently and at scale.

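Whole Slide Image (WSI) Analysis – WSIs are gigapixel images, far too large to review exhaustively by eye or to feed to a model in one piece. Vision models like HoVer-Net, which segments and classifies nuclei, and Cellpose, which segments cells, provide the building blocks that downstream models use to predict tissue malignancy. PanNuke, often mentioned alongside them, is a widely used annotated nuclei dataset for training and benchmarking such models.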
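Because a WSI cannot be processed in a single pass, pipelines first cut it into fixed-size tiles and run the model on each one. Here is a minimal sketch of the tiling step, assuming 256-pixel tiles and a hypothetical slide size. It only computes tile coordinates; a real pipeline would read each region with a WSI library such as OpenSlide and skip background tiles.

```python
def tile_origins(width, height, tile=256, overlap=0):
    """Top-left (x, y) pixel coordinates of tiles covering a slide.

    Partial tiles at the right and bottom edges are skipped, a common
    simplification in this sketch.
    """
    stride = tile - overlap
    if stride <= 0:
        raise ValueError("overlap must be smaller than tile size")
    return [
        (x, y)
        for y in range(0, height - tile + 1, stride)
        for x in range(0, width - tile + 1, stride)
    ]


print(tile_origins(512, 512))                    # [(0, 0), (256, 0), (0, 256), (256, 256)]
print(len(tile_origins(512, 512, overlap=128)))  # 9

# A hypothetical 100,000 x 80,000-pixel slide at full resolution:
print(len(tile_origins(100_000, 80_000)))        # 121680
```

Even this modest tile size yields over a hundred thousand model inputs per slide, which is why tissue filtering and batching matter so much in practice.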

Foundation Models for Pathology – Vision-language foundation models trained on pathology images paired with text, such as PLIP and CONCH, learn a shared representation of how tissue looks and how it is described. This lets pathologists search, compare, and retrieve similar cancer cases across large slide repositories. BioViL applies the same idea to chest X-rays and radiology reports.
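Case retrieval of this kind typically reduces to a nearest-neighbor search over embeddings. The model maps each slide, tile, or text query to a vector, and the most similar cases are those with the highest cosine similarity. The sketch below assumes the embeddings already exist and uses made-up three-dimensional vectors. Large repositories would use a dedicated vector index such as FAISS instead of a linear scan.

```python
import math


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def most_similar_cases(query, index, k=2):
    """Rank stored case embeddings by cosine similarity to the query."""
    ranked = sorted(
        ((case_id, cosine_similarity(query, emb)) for case_id, emb in index.items()),
        key=lambda pair: pair[1],
        reverse=True,
    )
    return ranked[:k]


# Hypothetical 3-D embeddings; real pathology models produce hundreds of dimensions.
index = {
    "case_A": [1.0, 0.0, 0.0],
    "case_B": [0.7, 0.7, 0.0],
    "case_C": [0.0, 0.0, 1.0],
}
query = [0.9, 0.1, 0.0]
print([case_id for case_id, _ in most_similar_cases(query, index)])  # ['case_A', 'case_B']
```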

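Tumor Grading and Biomarker Prediction – Research models can predict not just the presence of cancer but also tumor grade, and they can even estimate biomarker status such as HER2, PD-L1, or Ki-67 directly from routine H&E images. These predictions could eventually reduce reliance on immunohistochemistry tests, but they remain largely investigational. Projects like DeepPath, which predicted gene mutations from lung adenocarcinoma slides, helped pioneer this direction, while tools like HistoQC provide the slide quality control that such pipelines depend on.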

Cancer Surgery: Augmented Vision in the OR

Surgical oncology is another domain where real-time computer vision is making an impact. Accuracy is paramount during surgery, and vision systems can help surgeons distinguish tumor from healthy tissue and navigate complex anatomy.

Fluorescence-Guided Surgery – Fluorescent imaging agents such as Tumor Paint (tozuleristide) make cancerous tissue glow under near-infrared light. Computer vision models trained on fluorescence images can quantify and delineate that signal in real time, helping surgeons judge resection margins.

Surgical Robotics and AR – Researchers are adding AI modules for real-time tissue and instrument recognition to robotic platforms such as the da Vinci Surgical System. Paired with AR headsets like Microsoft HoloLens running Unity-based apps, these systems can overlay a patient’s MRI data directly onto the surgical field.

Smart Endoscopy – In gastrointestinal oncology, computer-aided detection (CADe) systems flag polyps and early lesions in the colon or esophagus in real time, and research groups build such detectors on architectures like YOLOv7 and DETR. Real-time CADe feedback is becoming increasingly common in clinical colonoscopy.
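Single-stage detectors such as YOLOv7 typically emit several overlapping boxes for the same polyp, and non-maximum suppression (NMS) collapses them into one. DETR was designed to avoid this step through set-based matching. Here is a minimal greedy NMS sketch with hypothetical boxes and scores; production detectors use vectorized, batched versions of the same idea.

```python
def iou(a, b):
    """Intersection over union of boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: keep the best box, drop boxes overlapping it too much, repeat."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        order = [i for i in order if iou(boxes[best], boxes[i]) < iou_threshold]
    return keep


# Hypothetical detections on one endoscopy frame: two overlapping boxes on the
# same polyp, plus one box elsewhere.
boxes = [(10, 10, 50, 50), (12, 12, 52, 52), (100, 100, 140, 140)]
scores = [0.90, 0.80, 0.70]
print(non_max_suppression(boxes, scores))  # [0, 2]
```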

Treatment Monitoring: Tracking the Fight

After treatment starts, regular monitoring is necessary to track progress and adapt strategies. Computer vision automates tumor volume measurement and change detection across serial scans.

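Radiomics Meets Deep Learning – Tools like PyRadiomics extract hundreds of quantitative features (shape, texture, intensity) from tumors segmented on serial imaging, and annotation platforms like MONAI Label speed up the production of those segmentations. When these features are fed into machine learning or deep learning models, they can help predict recurrence risk and treatment response. Generative and restoration models such as StyleGAN3 and SwinIR play a supporting role, for example in data augmentation or image enhancement, rather than making the predictions themselves.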

MRI Delta Radiomics – Delta radiomics compares radiomic features extracted from MRI scans acquired at different time points. This lets vision models detect subtle changes in the tumor that indicate response, or lack of response, to therapy.
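The delta idea itself is simple arithmetic: compute the same features at each time point and take their relative change. The sketch below hand-rolls three basic features purely for illustration. PyRadiomics defines a much larger, standardized feature set (including shape and texture features), and none of its API is used here. The intensities are hypothetical.

```python
import statistics


def first_order_features(voxels):
    """A few simple intensity/size features over voxels inside the tumor mask.

    Illustrative only: these are NOT PyRadiomics' feature definitions.
    """
    return {
        "mean_intensity": statistics.fmean(voxels),
        "std_intensity": statistics.pstdev(voxels),
        "voxel_count": len(voxels),
    }


def delta_features(baseline, follow_up):
    """Relative change per feature: (follow_up - baseline) / baseline."""
    return {
        name: (follow_up[name] - baseline[name]) / baseline[name]
        for name in baseline
        if baseline[name] != 0  # skip features undefined as a ratio
    }


# Hypothetical in-mask MRI intensities before and after two therapy cycles.
before = first_order_features([100, 110, 120, 130])
after = first_order_features([90, 100])
for name, change in delta_features(before, after).items():
    print(f"{name}: {change:+.1%}")
# mean_intensity: -17.4%
# std_intensity: -55.3%
# voxel_count: -50.0%
```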

Drug Discovery and Cell Behavior: Microscope Meets GPU

Cancer research increasingly involves analyzing how drugs affect cancer cells in vitro. Computer vision is speeding up this analysis tremendously.

Live Cell Imaging – Microscopy videos are processed with tools like DeepCell (deep learning segmentation and tracking), CellProfiler (image analysis pipelines), and napari (an interactive multidimensional image viewer). Together, these track cell division, migration, apoptosis, and morphological shifts across different drug concentrations.

Organoid and Spheroid Analysis – 3D cultures like organoids model tumors more faithfully than flat 2D cultures. Models like StarDist and Cellpose (in its 3D mode) can separate individual cells even in dense 3D structures, offering insight into how cancer cells behave in tissue-like environments.
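Dense cultures are hard because naive methods merge touching cells. The classic baseline, thresholding followed by connected-component labeling, is sketched below on a hypothetical mask. The last line shows its failure mode, which StarDist (star-convex polygon prediction) and Cellpose (predicted flow fields) were designed to overcome.

```python
from collections import deque


def label_cells(mask):
    """Label 4-connected foreground regions of a 2D binary mask.

    Returns (number_of_regions, label_grid), where label_grid holds 0 for
    background and 1..n for each region.
    """
    h, w = len(mask), len(mask[0])
    labels = [[0] * w for _ in range(h)]
    count = 0
    for r in range(h):
        for c in range(w):
            if mask[r][c] and not labels[r][c]:
                count += 1  # unlabeled foreground pixel: start a new region
                labels[r][c] = count
                queue = deque([(r, c)])
                while queue:  # breadth-first flood fill
                    y, x = queue.popleft()
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ny, nx = y + dy, x + dx
                        if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not labels[ny][nx]:
                            labels[ny][nx] = count
                            queue.append((ny, nx))
    return count, labels


# Hypothetical thresholded microscopy crop with two separate cells.
mask = [
    [1, 1, 0, 0],
    [1, 0, 0, 1],
    [0, 0, 1, 1],
    [0, 0, 0, 0],
]
print(label_cells(mask)[0])  # 2

# Two cells pressed against each other merge into a single region.
print(label_cells([[1, 1, 1, 1]])[0])  # 1
```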

Combating Data Scarcity: Federated and Self-Supervised Learning

Federated Learning – Frameworks like TensorFlow Federated, Flower, and MONAI FL allow hospitals to train models collaboratively without sharing raw data. Models move, data stays. This approach powers projects like FeTS (Federated Tumor Segmentation), which brings institutions together to train brain tumor segmentation models on MRI.
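Many of these systems are built around Federated Averaging (FedAvg). Each hospital trains locally, and a server combines the resulting weights in proportion to each site’s dataset size; Flower, for example, ships a FedAvg strategy. The sketch below shows only the server-side aggregation step on hypothetical parameter vectors. Production setups add secure aggregation, client selection, and many training rounds.

```python
def fed_avg(client_params, client_sizes):
    """FedAvg aggregation: average each parameter, weighted by local dataset size.

    client_params: one flat list of model parameters per hospital.
    client_sizes: number of local training examples at each hospital.
    Only these parameters leave each site; the images never do.
    """
    total = sum(client_sizes)
    n_params = len(client_params[0])
    return [
        sum(params[j] * size for params, size in zip(client_params, client_sizes)) / total
        for j in range(n_params)
    ]


# Hypothetical round with three hospitals; the third has twice as many scans.
updates = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
sizes = [100, 100, 200]
print(fed_avg(updates, sizes))  # [3.5, 4.5]
```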

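Self-Supervised Vision Models – Where labeled data is limited, models like DINOv2, MAE (Masked Autoencoders), and SimCLR learn visual features from unlabeled images. MAE learns by reconstructing masked image patches, SimCLR by matching augmented views of the same image, and DINOv2 through self-distillation. Together, these approaches greatly reduce the need for exhaustive manual labeling.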
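MAE’s pretext task starts by hiding most of the image. Here is a minimal sketch of that masking step, assuming the 75% mask ratio and the 16x16-pixel patches on 224x224 inputs used in the original MAE setup. The encoder and decoder themselves are omitted.

```python
import random


def random_patch_mask(num_patches, mask_ratio=0.75, seed=0):
    """Split patch indices into visible and masked sets, MAE-style.

    The encoder would see only `visible`; the decoder reconstructs the pixels
    of the `masked` patches, and the loss is computed on those patches.
    """
    rng = random.Random(seed)  # seeded so the example is reproducible
    ids = list(range(num_patches))
    rng.shuffle(ids)
    n_keep = int(num_patches * (1 - mask_ratio))
    return sorted(ids[:n_keep]), sorted(ids[n_keep:])


# A 224x224 image cut into 16x16 patches gives 14 * 14 = 196 patches.
visible, masked = random_patch_mask(14 * 14)
print(len(visible), len(masked))  # 49 147
```

Because the encoder processes only a quarter of the patches, pretraining is considerably cheaper than feeding it the full image.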

Ethics, Explainability, and Trust in the Loop

Explainable AI (XAI) Tools – Attribution methods such as Grad-CAM, Integrated Gradients, and SHAP produce heatmaps that show which image regions drove a model’s decision. These help clinicians validate model behavior, which is essential for adoption.
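Integrated Gradients attributes a prediction to input features by averaging the model’s gradients along a straight path from a baseline (for images, often a black image) to the actual input, then scaling by the input difference. The sketch below applies it to a toy logistic model with an analytic gradient so that it runs without a deep learning framework. For real PyTorch models, a library such as Captum provides an IntegratedGradients implementation. The weights and input are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def model(x, w, b):
    """Toy stand-in for a classifier: logistic regression on a feature vector."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def gradient(x, w, b):
    """Analytic gradient of the toy model with respect to its input."""
    s = model(x, w, b)
    return [s * (1.0 - s) * wi for wi in w]


def integrated_gradients(x, baseline, w, b, steps=200):
    """IG_i = (x_i - x'_i) * average gradient along the straight path x' -> x."""
    summed = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps  # midpoint Riemann sum over the path
        point = [bi + alpha * (xi - bi) for xi, bi in zip(x, baseline)]
        for i, g in enumerate(gradient(point, w, b)):
            summed[i] += g
    return [(xi - bi) * s / steps for xi, bi, s in zip(x, baseline, summed)]


# Hypothetical weights and a three-feature input; the baseline is all zeros.
w, b = [0.5, -0.25, 1.0], 0.1
x, baseline = [1.0, 2.0, -1.0], [0.0, 0.0, 0.0]
attributions = integrated_gradients(x, baseline, w, b)

# Completeness check: attributions should sum to model(x) - model(baseline).
print(sum(attributions), model(x, w, b) - model(baseline, w, b))
```

The completeness property printed at the end is a useful sanity check. If the attributions do not add up to the change in the model’s output, the path integral needs more steps.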

Regulatory Approvals – Tools such as Arterys Oncology AI have received FDA clearance, and companies like PathAI and Qure.ai (maker of qXR) have taken AI products through regulatory review, paving the way for broader adoption. Clearance demands rigorous validation, and explainability features help clinicians trust and audit these tools.

How VE Is Contributing to the Fight Against Cancer

At VE, our AI and ML engineers are knee-deep in building next-generation computer vision tools for healthcare, particularly in oncology. We’re not just training models—we’re deploying scalable, privacy-conscious, explainable, and clinically validated solutions that plug directly into existing hospital ecosystems.

From building federated learning pipelines for anonymized tumor detection to developing smart annotation platforms powered by weak supervision, our teams can leverage frameworks like MONAI, Detectron2, Segment Anything, NVIDIA Clara, and FastAI to push the boundaries of what’s possible in cancer detection and treatment.

What Comes Next?

We’re standing on the edge of something massive. As we move toward multimodal AI agents that can combine vision, language, and structured data, cancer care will become more personalized and proactive.

- Multi-agent systems built with LangChain, Haystack, and RAG pipelines could provide 360-degree patient assessments

- 3D vision and volumetric AI could make real-time 3D segmentation during surgery routine

- Foundation models for medical imaging, such as Google’s Med-PaLM M and MedSAM, aim to provide unified, flexible, zero-shot capabilities

And as real-world data from hospitals, wearables, and smart imaging devices keeps streaming in, foundation models should keep getting smarter, faster, and safer.

Cancer is tough. But with computer vision by our side, we’re fighting back with greater accuracy than ever before.

VE’s computer vision specialists are invaluable assets for any enterprise considering building its own computer vision capabilities. Tell us what you need, and our experts will get back to you right away.